What is a web crawler: how these data spiders optimize the internet

Crawlers are the reason search engines like Google, Bing, Yahoo, and DuckDuckGo can consistently deliver new and up-to-date search results. Like spiders, these bots roam the web, collecting information and storing it in indices. So how are web crawlers used, and what different kinds are out there on the World Wide Web?

What is a web crawler?

Crawlers are bots that search the internet for data. They analyze content and store information in databases and indices to improve search engine performance. They also collect contact and profile data for marketing purposes.

Because crawler bots move through the web and all its branching paths as confidently as a spider moves through its own, they are sometimes called spider bots. They are also known as search bots and web crawlers. The very first crawler was the World Wide Web Wanderer (often shortened to WWW Wanderer), written in the programming language Perl. Starting in 1993, the WWW Wanderer measured the growth of the still-young internet and stored the collected data in the first internet index, called Wandex.

The WWW Wanderer was followed in 1994 by the search engine WebCrawler, which is today the oldest search engine still in existence. Using crawlers, search engines can maintain their databases by automatically adding new web content and websites to the index, updating them, and deleting content that is no longer accessible.

Crawlers are especially important for search engine optimization (SEO). It is therefore vital that companies familiarize themselves with the different types and functions of web crawlers so that they can offer search-engine-optimized content online.

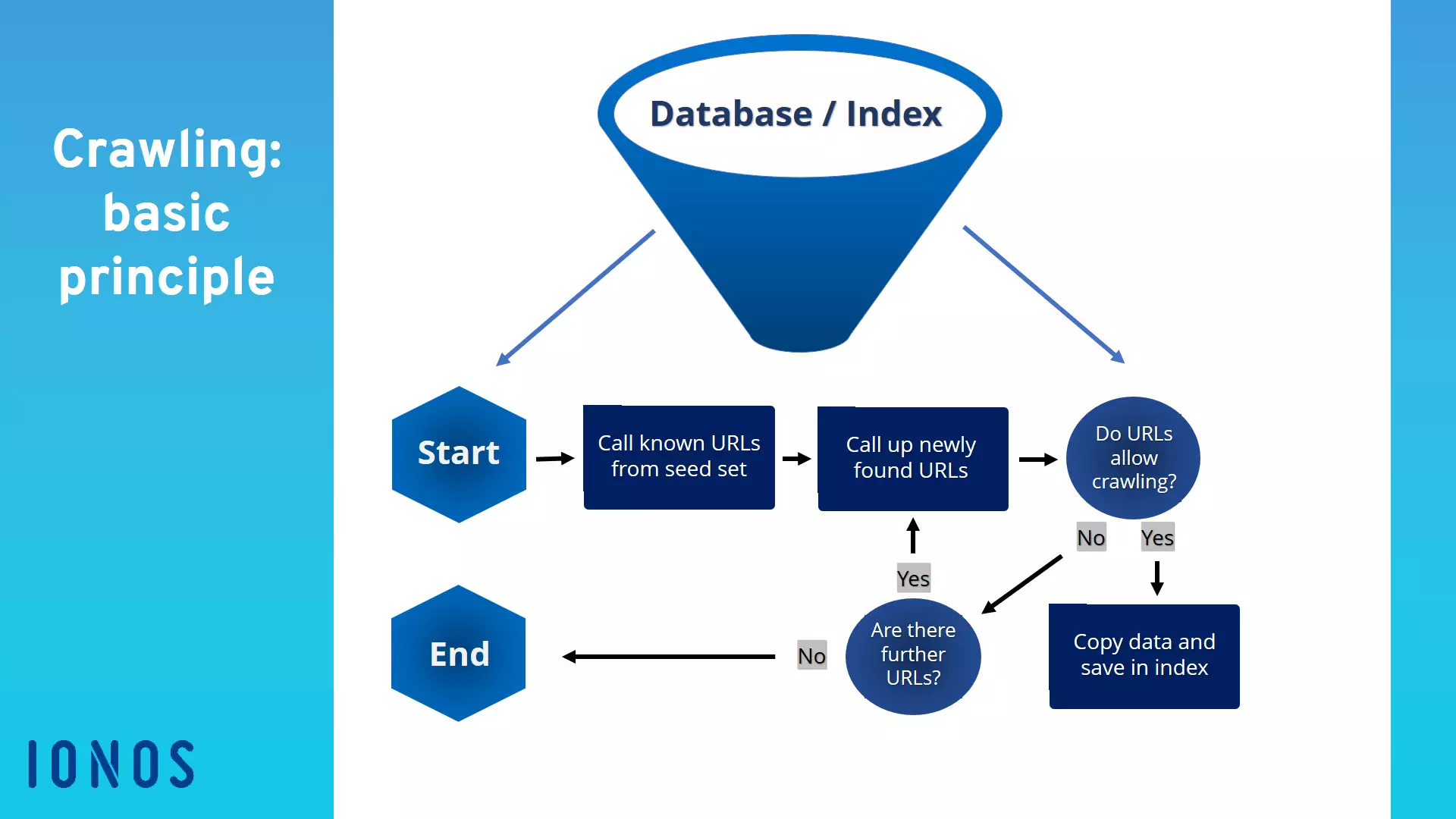

How does a crawler work?

Just like social bots and chatbots, crawlers consist of code: algorithms and scripts that define clear tasks and commands. The crawler repeats the functions defined in this code independently and continuously.

Crawlers navigate the web via hyperlinks to available websites. They analyze keywords and hashtags, index the content and URLs of each website, copy web pages, and open all or just a selection of the URLs found to analyze new websites. Crawlers also check whether links and HTML files are up to date.
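To illustrate the link-following step, the Python sketch below extracts the hyperlinks from a single HTML page using only the standard library (`html.parser` and `urllib.parse`). It is a minimal example under simplifying assumptions: `LinkExtractor` and `extract_links` are hypothetical names, and a production crawler would also need to handle `<base>` tags, `rel="nofollow"`, character encodings, and badly malformed markup.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects absolute http(s) URLs from the href attributes of <a> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL and drop #fragments,
                # which point into the same document rather than to a new page.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                # Keep only web pages; skip mailto:, javascript:, and similar schemes.
                if absolute.startswith(("http://", "https://")):
                    self.links.append(absolute)


def extract_links(html: str, base_url: str) -> list[str]:
    """Return the crawlable links found in one HTML page."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    page = """
    <a href="/about">About</a>
    <a href="https://example.org/blog#latest">Blog</a>
    <a href="mailto:info@example.com">Mail</a>
    """
    print(extract_links(page, "https://example.com/index.html"))
```

Resolving relative links with `urljoin` and stripping fragments keeps the crawler from treating `/page` and `/page#section` as two different pages.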

Using special web analysis tools, web crawlers can analyze information such as page views and links, collect data for data mining purposes, and make targeted comparisons (e.g. for comparison portals).

What are the different types of crawlers?

There are several different types of web crawlers that differ in terms of their focus and scope.

Search engine crawlers

The oldest and most common type of web crawler is the search bot. Search bots are operated by Google and alternative search engines such as Yahoo, Bing, and DuckDuckGo. They sift through, collect, and index web content, thereby extending and updating the search engine's database. The following are the best-known web crawlers:

- Googlebot (Google)

- Bingbot (Bing)

- Slurp (Yahoo)

- DuckDuckBot (DuckDuckGo)

- Baiduspider (Baidu)

- YandexBot (Yandex)

- Sogou Spider (Sogou)

- Exabot (Exalead)

- Facebot (Facebook)

- Alexa Crawler (Amazon)

Personal website crawlers

These basic crawlers have very simple functions and can be used by individual companies to perform specific tasks. For example, they can be used to monitor how often certain search terms are used or whether specific URLs are accessible.

Commercial website crawlers

Commercial crawlers are complex software solutions offered by companies that sell web crawlers. They offer more services and functions, and they save companies the time and money it would take to develop an in-house crawler.

Cloud website crawlers

There are also website crawlers that store data in the cloud instead of on local servers; these are usually sold by software companies as a service. Because they do not depend on local computers, their analysis tools and databases can be accessed from any device with the right login credentials, and their applications can be scaled.

Desktop website crawlers

You can also run basic web crawlers on your own desktop computer or laptop. These crawlers are quite limited and inexpensive, and can usually only analyze small amounts of data and websites.

How do crawlers work in practice?

The specific procedure followed by a web crawler consists of several steps:

- Crawl frontier: The crawl frontier is the data structure that holds the URLs a crawler has yet to visit. Search engines use it to control what gets crawled, for example whether crawlers should explore new URLs found via known, indexed websites and links provided in sitemaps, or whether they should only crawl specific websites and content.

- Seed set: Crawlers receive a seed set from the search engine or client. A seed set is a list of known or requested web addresses and URLs, based on previous indexing, databases, and sitemaps. Starting from these seeds, crawlers follow the links they find until a path ends in a loop (a page they have already visited) or a dead link.

- Index expansion: The seed analysis allows crawlers to analyze web content and add to the index. They update old content and delete URLs and links from the index that are no longer accessible.

- Crawling frequency: Although crawlers are constantly roving the web, programmers can determine how often they visit and analyze URLs. To do so, they evaluate page performance, update frequency, and data traffic, and use this information to define the crawl demand.

- Indexing management: Website administrators can specifically exclude crawlers from their website or from parts of it. This is done with a robots.txt file (the Robots Exclusion Protocol) or with robots meta tags and link attributes such as noindex and nofollow. Crawlers that respect these rules receive instructions to avoid a website or to analyze it only to a limited extent.
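The seed set, crawl frontier, and index expansion steps can be sketched as a breadth-first traversal. This is a minimal sketch, not how any particular search engine implements it: the `crawl` function, the `fetch` callback, and the in-memory `web` dictionary are hypothetical stand-ins for real HTTP requests, and real frontiers also prioritize URLs and respect robots.txt rules and crawl delays.

```python
from collections import deque
from typing import Callable, Optional

# Hypothetical fetch callback: returns the links found on a page, or None if the
# page is unreachable (a dead link). Passing it in lets the sketch run offline.
Fetch = Callable[[str], Optional[list[str]]]


def crawl(seed_set: list[str], fetch: Fetch, max_pages: int = 100) -> tuple[list[str], list[str]]:
    """Breadth-first crawl starting from the seed set.

    Returns (indexed, dead): the pages fetched successfully, in visit order,
    and the URLs that could not be fetched.
    """
    frontier = deque(seed_set)   # crawl frontier: URLs waiting to be visited
    seen = set(seed_set)         # every URL ever queued, so loops are not re-crawled
    indexed: list[str] = []
    dead: list[str] = []

    while frontier and len(indexed) < max_pages:
        url = frontier.popleft()
        links = fetch(url)
        if links is None:
            dead.append(url)     # dead link: nothing to follow from here
            continue
        indexed.append(url)      # index expansion: the page is added to the index
        for link in links:
            if link not in seen: # skip URLs already queued or visited (loops)
                seen.add(link)
                frontier.append(link)
    return indexed, dead


if __name__ == "__main__":
    # Hypothetical miniature web: "/c" links back to "/a" (a loop),
    # and "/gone" does not exist (a dead link).
    web = {
        "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
        "https://example.com/b": ["https://example.com/gone"],
        "https://example.com/c": ["https://example.com/a"],
    }
    indexed, dead = crawl(["https://example.com/a"], web.get)
    print(indexed)
    print(dead)
```

The queue produces breadth-first order, and the `seen` set is what prevents the crawler from circling a loop forever.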

What are the advantages of crawlers?

Inexpensive and effective: Web crawlers handle time-consuming and costly analysis tasks and can scan, analyze and index web content faster, cheaper, and more thoroughly than humans.

Easy to use, wide scope: Web crawlers are quick and easy to implement and ensure thorough and continuous data collection and analysis.

Improve your online reputation: Crawlers can be used to optimize your online marketing by expanding and focusing your customer base. They can also be used to improve a company’s online reputation by recording communication patterns found in social media.

Targeted advertising: Data mining and targeted advertising can be used to communicate with specific customer audiences. Websites with a high crawling frequency have their new content indexed sooner, which can help them rank well in search engines and receive more hits.

Analyze company and customer data: Companies can use crawlers to evaluate and analyze customer and company data available online and use this data for their own marketing and corporate strategies.

Search engine optimization: By analyzing search terms and keywords, crawlers help companies define focus keywords, narrow down the competition, and increase page hits.
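As a rough illustration of on-page keyword analysis, the sketch below counts the most frequent meaningful words in a page's text. The `top_keywords` function, the tiny `STOP_WORDS` list, and the minimum word length are illustrative assumptions; real SEO tools add stemming, multi-word phrases, and search volume data that this sketch does not have (here, "crawler" and "crawlers" are counted separately).

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; real tools use larger, language-specific lists.
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "the", "to", "with"}


def top_keywords(text: str, n: int = 5) -> list[tuple[str, int]]:
    """Return the n most frequent non-stop-words in text, with their counts."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    # Ignore stop words and very short tokens, which rarely make useful keywords.
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return counts.most_common(n)


if __name__ == "__main__":
    text = "Web crawlers index the web. A crawler follows links; crawlers follow links to index new pages."
    print(top_keywords(text, 4))
```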

Additional applications include:

- Continuous system monitoring to find vulnerabilities;

- Archiving old websites;

- Comparing updated websites with their previous versions;

- Detecting and removing dead links;

- Analyzing the keyword search volume; and

- Detecting spelling mistakes and any other incorrect content.
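Comparing a page with its previous version is also one way a crawler can adapt the crawling frequency described earlier. The sketch below assumes a simple halve-or-double policy: pages that change get revisited sooner, stable pages less often. `PageRecord`, `record_visit`, and the interval bounds are hypothetical; search engines do not publish their actual scheduling rules.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class PageRecord:
    """What a crawler remembers about one URL between visits (hypothetical structure)."""
    content_hash: str = ""
    revisit_hours: float = 24.0


MIN_HOURS, MAX_HOURS = 1.0, 24.0 * 30   # illustrative bounds on the revisit interval


def fingerprint(content: str) -> str:
    """Hash the page content so versions can be compared without storing full copies."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def record_visit(record: PageRecord, content: str) -> bool:
    """Compare fetched content with the previous version and adapt the revisit interval.

    Returns True if the page is new or has changed since the last visit.
    """
    new_hash = fingerprint(content)
    if not record.content_hash:
        record.content_hash = new_hash   # first visit: nothing to compare yet
        return True
    changed = new_hash != record.content_hash
    if changed:
        # The page changes often: come back sooner.
        record.revisit_hours = max(MIN_HOURS, record.revisit_hours / 2)
    else:
        # The page is stable: come back less often.
        record.revisit_hours = min(MAX_HOURS, record.revisit_hours * 2)
    record.content_hash = new_hash
    return changed


if __name__ == "__main__":
    rec = PageRecord()
    for version in ["<p>v1</p>", "<p>v1</p>", "<p>v2</p>"]:
        print(record_visit(rec, version), rec.revisit_hours)
```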

How can the crawling frequency of a website be increased?

If you want your website to rank as high as possible in search engines and be visited regularly by web crawlers, you should make it as easy as possible for these bots to find your website. Websites with a high crawling frequency have their changes picked up by search engines sooner. The following factors are crucial in making your website easier for crawlers to find:

- The website needs to contain a variety of hyperlinks, both internal and to other sites, and also be linked from other websites. Crawlers will then be able to find your website via links and analyze it as a branching node rather than a one-way street.

- The website content needs to be kept up to date. This includes content, links, and HTML code.

- You need to ensure that the servers are available.

- The website should have fast load times.

- There should be no duplicate or unnecessary links or content.

- You should make sure that your sitemap, robots.txt file, and HTTP response headers provide crawlers with important information about your website.
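Python's standard library includes `urllib.robotparser`, which shows how a well-behaved crawler interprets a robots.txt file. In the sketch below, the robots.txt content and the user-agent names `MyCrawler` and `ExampleBot` are made up for illustration; a real crawler would download the file from the site's root before requesting other pages. Keep in mind that robots.txt is advisory: it only affects crawlers that choose to honor it, and not every search engine supports every directive (Google, for instance, ignores `Crawl-delay`).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5

User-agent: ExampleBot
Disallow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given crawler may request a given URL.
for agent, url in [
    ("MyCrawler", "https://www.example.com/products/"),
    ("MyCrawler", "https://www.example.com/private/data.html"),
    ("ExampleBot", "https://www.example.com/products/"),
]:
    print(agent, url, parser.can_fetch(agent, url))

print(parser.crawl_delay("MyCrawler"))  # delay from the catch-all (*) group
print(parser.site_maps())               # sitemap URLs declared in the file
```

`MyCrawler` has no group of its own, so it falls back to the `*` rules, while `ExampleBot` is shut out of the entire site.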

What is the difference between web crawlers and scrapers?

While they are often compared to each other, web crawlers and scrapers are not the same type of bot. Web crawlers are primarily used to search for content, store it in indices and analyze it. Scrapers, on the other hand, are used to extract data from websites via a process called web scraping.

There is some overlap between the two: crawlers often use web scraping to copy and store web content. However, a crawler's main functions are to request URLs, analyze content, and add new links and URLs to the index.

In contrast, the primary function of scrapers is to visit specific URLs, extract specific data from websites, and store it in databases for later use.
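To make the contrast concrete, here is a minimal scraper sketch: instead of following links, it pulls specific fields out of a page whose structure is known in advance. The `FieldScraper` class, the `scrape` function, and the class names `name` and `price` are hypothetical; real scrapers are written against each target site's actual markup and must be updated when that markup changes.

```python
from html.parser import HTMLParser
from typing import Optional


class FieldScraper(HTMLParser):
    """Extracts the text of elements whose class attribute matches a target field."""

    def __init__(self, fields: set[str]) -> None:
        super().__init__()
        self.fields = fields
        self.current: Optional[str] = None   # field currently being read, if any
        self.data: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        # An element may carry several space-separated classes.
        for cls in (dict(attrs).get("class") or "").split():
            if cls in self.fields:
                self.current = cls

    def handle_endtag(self, tag):
        self.current = None

    def handle_data(self, data):
        if self.current and data.strip():
            self.data[self.current] = data.strip()


def scrape(html: str, fields: set[str]) -> dict[str, str]:
    """Return the requested fields from one page, ready to store in a database."""
    parser = FieldScraper(fields)
    parser.feed(html)
    parser.close()
    return parser.data


if __name__ == "__main__":
    page = '<div class="product"><h1 class="name">Desk lamp</h1><span class="price">24.99</span></div>'
    print(scrape(page, {"name", "price"}))
```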