RankBrain: the evolution of the Google algorithm

Google uses a self-learning algorithm component as part of its web search, according to senior research scientist Greg Corrado, who helped develop RankBrain. On October 15th, 2015, the Google employee told Bloomberg that the search engine market leader uses artificial intelligence (AI) to interpret user queries. The system in question, named RankBrain, had already been integrated into the search engine’s algorithm months before the interview was published. Google has been investing millions in AI research for years. As early as 2012, the company hired the technology visionary Raymond Kurzweil as Director of Engineering, and in 2014 it acquired the AI startup DeepMind for more than $500 million. With RankBrain, Google’s AI research has now reached the company’s core business. But how smart is the new technology really? And what impact does RankBrain have on the work of website operators and SEO experts?

What is RankBrain?

According to Corrado, RankBrain has been part of Hummingbird, Google’s overarching search algorithm, since the beginning of 2015. At first, however, it was only used for new search queries.

Google receives around 3 billion search queries daily. Around 15% of these are keywords and word combinations that Google has never seen before, such as colloquial terms, neologisms, or complex long tail phrases.

A long tail keyword is a complex search term that consists of several words, up to a complete sentence. The opposite of long tail is short head, which is a short, precise phrase. For comparison: “how does a cloud server work?” versus “cloud server”.
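As a rough illustration, the distinction can be approximated by counting words. The Python sketch below treats a query of three or more words as long tail. The threshold and the function name `classify_keyword` are assumptions made for this example, since there is no fixed cutoff.

```python
def classify_keyword(query: str, long_tail_min_words: int = 3) -> str:
    """Label a query 'long tail' or 'short head' by counting its words.

    The default cutoff of three words is a hypothetical threshold, not a standard.
    """
    word_count = len(query.split())
    return "long tail" if word_count >= long_tail_min_words else "short head"


for query in ["cloud server", "how does a cloud server work?"]:
    print(f"{query!r}: {classify_keyword(query)}")
```

Word count is only a proxy. In practice, long tail queries are also characterized by low individual search volume and high specificity.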

In 2016, the use of RankBrain was extended to the entire Google search platform, meaning the technology is now involved in the processing of all queries that Google receives via the web search.

RankBrain’s main task is to interpret keywords and search phrases with the aim of working out what the user is trying to search for. RankBrain has been presented as a self-learning AI system. But what does Google mean when it comes to artificial intelligence? And how does RankBrain work exactly?


AI research: 60 years of artificial intelligence

A fully autonomous computer and the technological singularity predicted by futurists are still science fiction. Instead, AI research has been concerned with automating intelligent behavior for around 60 years. To this day, there is no hard-and-fast, generally accepted definition of the term 'artificial intelligence', and the same applies to the concept of intelligence itself.

AI research was born at a conference held at Dartmouth College in the US in 1956. According to the 1955 funding proposal, the topics on the agenda included automatic computers, programming computers to use language, neural networks, self-improvement (machine learning), abstractions, and randomness and creativity. John McCarthy, who organized the event, gathered all of this under a new, previously unknown label: artificial intelligence. The award-winning computer scientist thereby laid the foundations for a new interdisciplinary field, whose research interest he defined in the funding proposal for the Dartmouth Conference as follows:

For the present purpose the artificial intelligence problem is taken to be that of making a machine behave in ways that would be called intelligent if a human were so behaving.

John McCarthy, 1955

A similar definition can be found in the Encyclopaedia Britannica:

Artificial intelligence (AI), the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings.

Encyclopaedia Britannica

Computer pioneer Alan Turing suggested an experiment in 1950 to test the intelligence of a machine objectively. In the so-called Turing test, a human subject holds a conversation with two unknown partners using only a keyboard and screen, so there is no visual or audio contact. One partner is a person and the other is a machine, and both try to convince the subject that they are conversing with a thinking being. According to Turing, the machine passes the test if the subject cannot tell which partner is the machine and which is the person. To date, no machine is generally accepted as having cleared this hurdle. The chatbot Eugene Goostman attracted a lot of media attention in 2014 when it was claimed to have passed a modified version of the test, but this claim is widely disputed.

Critics, however, doubt that Turing’s test is suitable for proving machine intelligence at all. The experimental setup merely simulates an interpersonal conversation, and speech ability reflects only one part of human intelligence. In addition, the Turing test only checks whether the signals emanating from the machine can be interpreted as intelligent behavior. Whether intelligence is actually present, for example in the form of intentionality or consciousness, is not examined. In practice, however, this distinction is of secondary importance, since the main focus is on the functionality of AI systems. This practice-oriented approach to AI research is reflected in computer scientist Elaine Rich’s definition:

Artificial Intelligence is the study of how to make computers do things at which, at the moment, people are better.

Elaine Rich, 1983

There are two competing concepts of artificial intelligence:

- Hard Artificial Intelligence (usually called strong AI): according to this concept, a machine would need intellectual abilities similar to those of a human being in order to be deemed intelligent. Besides the ability to draw conclusions and solve problems, this includes qualities such as self-knowledge, self-awareness, sentience, and wisdom. The goal is to create intelligence.

- Soft Artificial Intelligence (usually called weak AI): according to this concept, it is sufficient to equip machines with abilities that are associated with intelligent behavior in humans. The goal is therefore to use mathematical rules to simulate intelligent human behavior such as logical thinking, decision making, planning, learning, and communication.

When Google describes RankBrain as a self-learning AI system, it is referring to the soft AI concept. RankBrain is a technology that automatically solves problems that previously had to be handled by people. Like most systems of this type, RankBrain relies on machine learning techniques.

Machine learning is the artificial generation of knowledge from experience. Machine learning systems use mathematical algorithms to analyze large amounts of data and identify patterns, trends, and interconnections. On that basis, they make independent predictions. Further information on machine learning systems and their possible uses in online marketing and web analysis can be found in our basic article on the subject.

By definition, RankBrain is artificial intelligence in the sense of the soft AI concept. The system is based on machine learning techniques and is used within the Google search algorithm to interpret user input.

How does RankBrain work?

RankBrain helps Google interpret user input and find the web pages in the Google search index (a database of around 100 million gigabytes) that best match the user’s search. The AI system goes far beyond simply matching search terms.

With the Hummingbird update in August 2013, Google introduced so-called semantic search. Before Hummingbird, search terms and word combinations were evaluated statically and without context. The update shifted the focus to the meaning behind user input. With RankBrain, Google now supplements semantic search with a self-learning AI system that can use previously acquired knowledge to answer new and unique search queries.

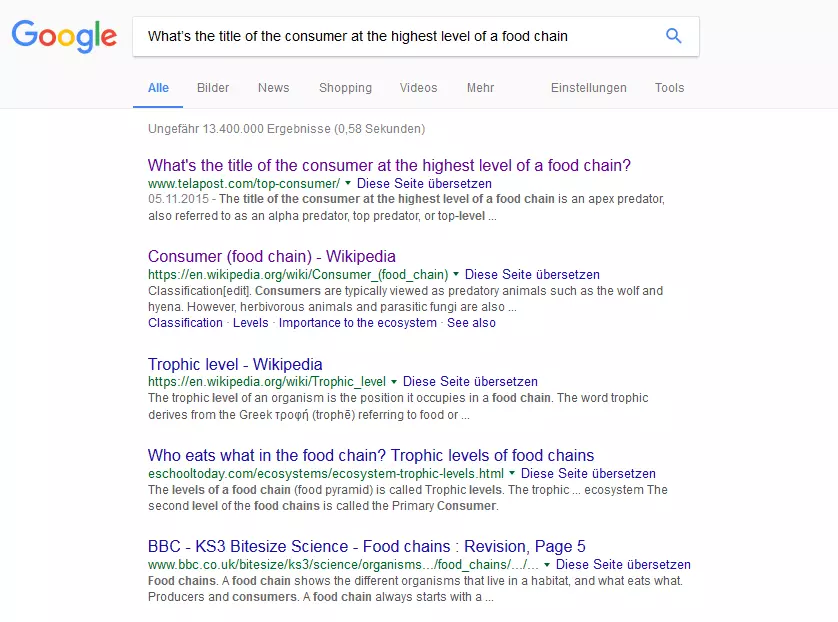

To illustrate how RankBrain is used, Google gave Bloomberg the following example search query:

'What’s the title of the consumer at the highest level of a food chain'

Instead of analyzing each word independently, RankBrain captures the semantics of the entire input and thereby determines the searcher’s intention. Despite the long tail phrase, the searcher can expect a quick answer.

Since RankBrain is a machine learning system, it relies on its experience with previous search queries. It establishes links and makes predictions about what the user is looking for and how best to answer the question. To do this, it has to resolve ambiguities and work out the meaning of previously unknown terms (e.g., neologisms). Google won’t reveal, however, how the AI system masters this challenge.

SEO experts suggest that RankBrain uses word vectors to convert search queries into a form that allows computers to interpret their meaning. In 2013, Google released Word2Vec, open-source machine learning software that represents words as vectors so that the semantic relations between them can be measured and compared mathematically. This analysis is based on linguistic text corpora. In the first step, Word2Vec creates an n-dimensional vector space in which each word of the underlying text body (the 'training data') is represented as a vector in order to 'learn' the context between words. Here, n is the number of dimensions in which each word is represented. The more dimensions are chosen for the word vectors, the more relations to other words the program can capture. In the second step, the vector space is fed into an artificial neural network (ANN), which adapts it by means of a learning algorithm. As a result, words that are used in similar contexts end up with similar word vectors. The similarity between two word vectors is calculated as the so-called cosine similarity, a value between -1 and +1.

In short: if you give Word2Vec an arbitrary text corpus as input, the program delivers corresponding word vectors as output. These vectors make it possible to assess the semantic proximity of the words in the corpus. When Word2Vec is confronted with new input, the learning algorithm adapts the vector space and can therefore create new meanings or reject old assumptions: the neural network is 'trained'.
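The cosine value described above, usually called cosine similarity, can be computed directly from two vectors. The Python sketch below uses three-dimensional vectors invented purely for illustration. Real Word2Vec vectors are learned from a corpus and often have a few hundred dimensions. The function name `cosine_similarity` is our own.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors: +1 same direction, 0 orthogonal, -1 opposite."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


# Hypothetical 3-dimensional word vectors, invented for illustration only.
vectors = {
    "predator": [0.9, 0.8, 0.1],
    "carnivore": [0.85, 0.75, 0.2],
    "server": [0.1, -0.2, 0.95],
}

print(cosine_similarity(vectors["predator"], vectors["carnivore"]))  # close to +1
print(cosine_similarity(vectors["predator"], vectors["server"]))     # much lower
```

Cosine similarity ignores vector length and compares only direction. This is why it is a common choice for word vectors, whose lengths partly reflect how often a word occurs.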

Using artificial neural networks (ANNs), AI researchers try to simulate the organizational and processing principles of the human brain. The aim is to develop systems that can deal with vague or imprecise information and take on tasks that were previously carried out by humans. Google also uses neural networks for automatic image recognition, for example.
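To make the training step of Word2Vec more concrete, here is a minimal pure-Python sketch of skip-gram with negative sampling. This is one of the two training architectures Word2Vec offers (the other is CBOW). It is a simplified illustration, not Google’s implementation and not RankBrain. The toy corpus, the hyperparameters, and the names `train_skipgram` and `cosine` are assumptions made for this example. The sketch also samples negative words uniformly, whereas Word2Vec samples them according to word frequency.

```python
import math
import random


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def train_skipgram(sentences, dim=8, window=2, negatives=3, epochs=200, lr=0.05, seed=0):
    """Simplified skip-gram with negative sampling.

    Every word has an input vector (the word vector that is kept) and an output vector.
    For each (center, context) pair inside the window, the network is nudged so that
    sigmoid(input[center] . output[context]) moves toward 1. It moves toward 0 for a few
    randomly drawn "negative" words. Words with similar contexts thus get similar vectors.
    """
    rng = random.Random(seed)
    vocab = sorted({w for s in sentences for w in s})
    w_in = {w: [rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for w in vocab}
    w_out = {w: [0.0] * dim for w in vocab}

    for _ in range(epochs):
        for sentence in sentences:
            for i, center in enumerate(sentence):
                for j in range(max(0, i - window), min(len(sentence), i + window + 1)):
                    if j == i:
                        continue
                    context = sentence[j]
                    # One positive target (label 1) plus uniformly drawn negatives (label 0).
                    pool = [w for w in vocab if w != context]
                    targets = [(context, 1.0)] + [(w, 0.0) for w in rng.sample(pool, negatives)]
                    v = w_in[center]
                    grad_v = [0.0] * dim
                    for word, label in targets:
                        u = w_out[word]
                        g = lr * (label - sigmoid(sum(a * b for a, b in zip(v, u))))
                        for k in range(dim):
                            grad_v[k] += g * u[k]  # uses u before it is updated
                            u[k] += g * v[k]
                    for k in range(dim):
                        v[k] += grad_v[k]
    return w_in


corpus = [s.split() for s in [
    "the lion hunts prey in the savanna",
    "the tiger hunts prey in the jungle",
    "the cloud server hosts the website",
    "the virtual server hosts the application",
]]
vectors = train_skipgram(corpus)
print(f"lion/tiger:  {cosine(vectors['lion'], vectors['tiger']):.2f}")
print(f"lion/server: {cosine(vectors['lion'], vectors['server']):.2f}")
```

With only four sentences, the exact numbers depend on the random seed. However, "lion" and "tiger" share the same neighbors ("the", "hunts", "prey"), so their vectors should end up more similar to each other than either is to "server".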

Google doesn’t officially make a connection between the way that Word2Vec works and the RankBrain search algorithm component but it is assumed that the AI system relies on similar mathematical operations.

RankBrain and search engine optimization (SEO)

Even more surprising than the announcement that Google’s AI research results are incorporated into web search is the extent to which this happens. RankBrain has been involved in interpreting all search queries since 2016. In addition, according to Corrado, the self-learning AI system is the third most important ranking factor in the Google algorithm.

According to Google, RankBrain functions as the third most important ranking factor in web search. Positions 1 and 2 are shared by content and backlink factors, according to Google’s Search Quality Senior Strategist Andrey Lipattsev.

For website operators and SEO experts, this mainly affects keyword strategies. As a semantic search engine, Google can draw on background knowledge in the form of concepts and relationships to determine the meaning of texts and search queries. Whether a website is a good result for a particular search depends less on whether it contains the search term than on whether its (text) content is relevant to the concept that RankBrain associates with the search term. The focus is not on the keyword itself, but on the website’s content relevance.

Searchmetrics came to the same conclusion. The software company operates a search and content marketing platform on the international SEO market and has published a highly respected series of studies on the central ranking factors of the Google algorithm since 2012. The core message of its 2016 study: classic checklist SEO is a thing of the past. Under the title 'Ranking factors according to Searchmetrics', the company examines the latest developments at Google. It concludes that general ranking factors no longer reflect the current state of the search engine’s development. Instead, website operators should concentrate on content relevance and user focus. However, the demands on a website vary greatly depending on the sector and industry. The company therefore announced that it would present industry-specific research results in the future.

Thanks to RankBrain, content relevance and user intention are the focus of search engine optimization.

To examine the link between a website’s ranking and its relevance for the respective search term, Searchmetrics also used word embedding software in its 2016 study. This software represents semantic relationships as vectors. For a set of 10,000 keywords, the company determined the content relevance of the top 20 search results for each search term, disregarding the keyword itself. To do this, the analysts deleted the respective search term from the texts of the ranking websites and assigned the remaining content a relevance score from 0 to 100. This score was then correlated with the position on the search results page.
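The procedure described above (remove the keyword, score the remaining text, correlate the score with the ranking position) can be sketched in Python. This is not Searchmetrics’ code. Instead of word embeddings, the sketch swaps in a simple bag-of-words cosine against a hypothetical list of topic terms, scaled to 0-100. It then uses Spearman rank correlation, one reasonable choice for correlating a score with a ranking position. All function names, topic terms, and sample pages are invented for illustration.

```python
import math
import re
from collections import Counter


def strip_keyword(text: str, keyword: str) -> str:
    """Remove every occurrence of the keyword phrase (case-insensitive) from a page text."""
    return re.sub(re.escape(keyword), " ", text, flags=re.IGNORECASE)


def relevance_score(text: str, topic_terms: list[str]) -> float:
    """Hypothetical 0-100 score: cosine between the page's word counts and the topic terms.

    Word counts are non-negative, so the cosine lies in [0, 1] before scaling.
    """
    page = Counter(re.findall(r"\w+", text.lower()))
    topic = Counter(t.lower() for t in topic_terms)
    dot = sum(page[w] * topic[w] for w in topic)
    norm = math.sqrt(sum(c * c for c in page.values())) * math.sqrt(sum(c * c for c in topic.values()))
    return 100.0 * dot / norm if norm else 0.0


def ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation: the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


# Invented toy data, NOT Searchmetrics results: (position, page text).
keyword = "cloud server"
topic = ["virtual", "hosting", "resources", "scalable", "data", "center"]
pages = [
    (1, "A cloud server is a virtual machine with scalable resources in a data center."),
    (2, "Cloud server hosting: scalable virtual resources."),
    (3, "Order now! Contact us today for a cloud server quote."),
]
positions = [pos for pos, _ in pages]
scores = [relevance_score(strip_keyword(text, keyword), topic) for _, text in pages]
print([round(s, 1) for s in scores])
print(spearman(positions, scores))
```

In this toy example, the correlation comes out at roughly -0.5. Since position 1 is the best rank, a negative value means that better-ranked pages tend to have higher relevance scores.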

The findings: websites at the top of Google’s search results were significantly more content-relevant than sites ranking lower. Searchmetrics found the highest content relevance for websites in positions 3 to 6. Note that for many search terms, positions 1 and 2 are occupied by company websites, which, according to Searchmetrics, also benefit from being well-known brands in the Google ranking.

Even the best content will only reach the top of Google’s rankings if it rests on a sound technical foundation. Successful sites are equally accessible to humans and machines. The main factors are page loading time and file size, as well as a website’s URL structure and internal linking. Since Google’s mobile-friendly update, a mobile-friendly page structure has also become one of the basic technical requirements for a website’s success.

The question remains: how should website operators respond to RankBrain and other Google developments? According to Searchmetrics, holistic text design is a central success factor. This means creating text that is based not on keywords but on a topic. The focus is therefore on the user, and the goal is to answer search queries on Google with relevant content. To this end, website operators should determine the search intention behind every keyword they want to rank for. Only then can search terms be structured and grouped into topics that serve as the basis for an editorial plan and for readable, content-rich texts. You can find out more about how to deal with search terms in our guide to keyword research, analysis, and strategy.
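The step of determining intent and grouping keywords into topics can be sketched in a few lines. The Python example below is purely illustrative, and none of it is a method described by Searchmetrics. The cue-word lists, the topic seed terms, the Jaccard-overlap assignment, and the functions `guess_intent` and `group_by_topic` are all assumptions. In practice, intent analysis often also involves checking which results Google actually shows for a keyword.

```python
from collections import defaultdict

# Hypothetical cue words for a very rough intent guess.
QUESTION_WORDS = {"how", "what", "why", "which", "when", "where", "who"}
TRANSACTIONAL_WORDS = {"buy", "price", "cheap", "order", "rent", "cost"}


def guess_intent(keyword: str) -> str:
    """Guess intent from cue words: question -> informational, purchase cue -> transactional."""
    words = keyword.lower().split()
    if words and words[0] in QUESTION_WORDS:
        return "informational"
    if TRANSACTIONAL_WORDS & set(words):
        return "transactional"
    return "unclear"


def group_by_topic(keywords: list[str], topics: dict[str, set[str]]) -> dict[str, list[tuple[str, str]]]:
    """Assign each keyword (with its guessed intent) to the topic whose seed terms
    overlap most with its words (Jaccard index). Keywords without overlap stay unassigned."""
    groups: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for keyword in keywords:
        words = set(keyword.lower().split())
        best_topic, best_score = "unassigned", 0.0
        for topic, seeds in topics.items():
            score = len(words & seeds) / len(words | seeds)
            if score > best_score:
                best_topic, best_score = topic, score
        groups[best_topic].append((keyword, guess_intent(keyword)))
    return dict(groups)


# Hypothetical topics and keywords for a hosting website.
topics = {
    "cloud hosting": {"cloud", "server", "vps", "hosting"},
    "domains": {"domain", "dns", "registration"},
}
keywords = ["how does a cloud server work", "cloud server price", "what is a vps", "buy domain"]
for topic, entries in group_by_topic(keywords, topics).items():
    print(topic, entries)
```

Each resulting group, such as "cloud hosting" with one transactional and two informational queries, could then become one entry in an editorial plan, with a separate text for each intent.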