How to create and apply a Kubernetes load balancer

To use Kubernetes as efficiently as possible, the workload needs to be distributed evenly across the available pods. In the container management software, a load balancer is an effective way to achieve this.


What is a load balancer in Kubernetes?

A load balancer distributes the load that servers or virtual machines have to process as efficiently as possible, which improves overall performance. It is normally placed in front of the servers so that no individual server is overloaded and the available resources are used well. If a server fails, the load balancer keeps the system available by redirecting requests to the remaining servers.

Kubernetes load balancers work a little differently but are based on the same idea. Kubernetes distinguishes between two types of load balancer:

- Internal Kubernetes load balancers

- External Kubernetes load balancers

Internal Kubernetes load balancers

Internal load balancers are covered only briefly here for completeness; the focus of this article is on external ones. Internal load balancers take a different approach from classic load balancers: they make a service accessible only to applications running in the same virtual network as your Kubernetes cluster.
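How a service is made internal depends on the cloud provider and is usually requested through an annotation on the service. The following minimal sketch assumes a cluster on Microsoft Azure (AKS), where the annotation `service.beta.kubernetes.io/azure-load-balancer-internal` requests an internal load balancer; other providers, including IONOS, may use a different annotation or may not offer internal load balancers at all. The service name, pod label and ports are hypothetical.

```yaml
# Sketch: internal load balancer on AKS. The annotation is Azure-specific;
# check your provider's documentation for its equivalent.
apiVersion: v1
kind: Service
metadata:
  name: internal-app          # hypothetical name
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: internal-app         # hypothetical pod label
  ports:
    - port: 80                # port the service listens on
      targetPort: 8080        # hypothetical container port
```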

External Kubernetes load balancers

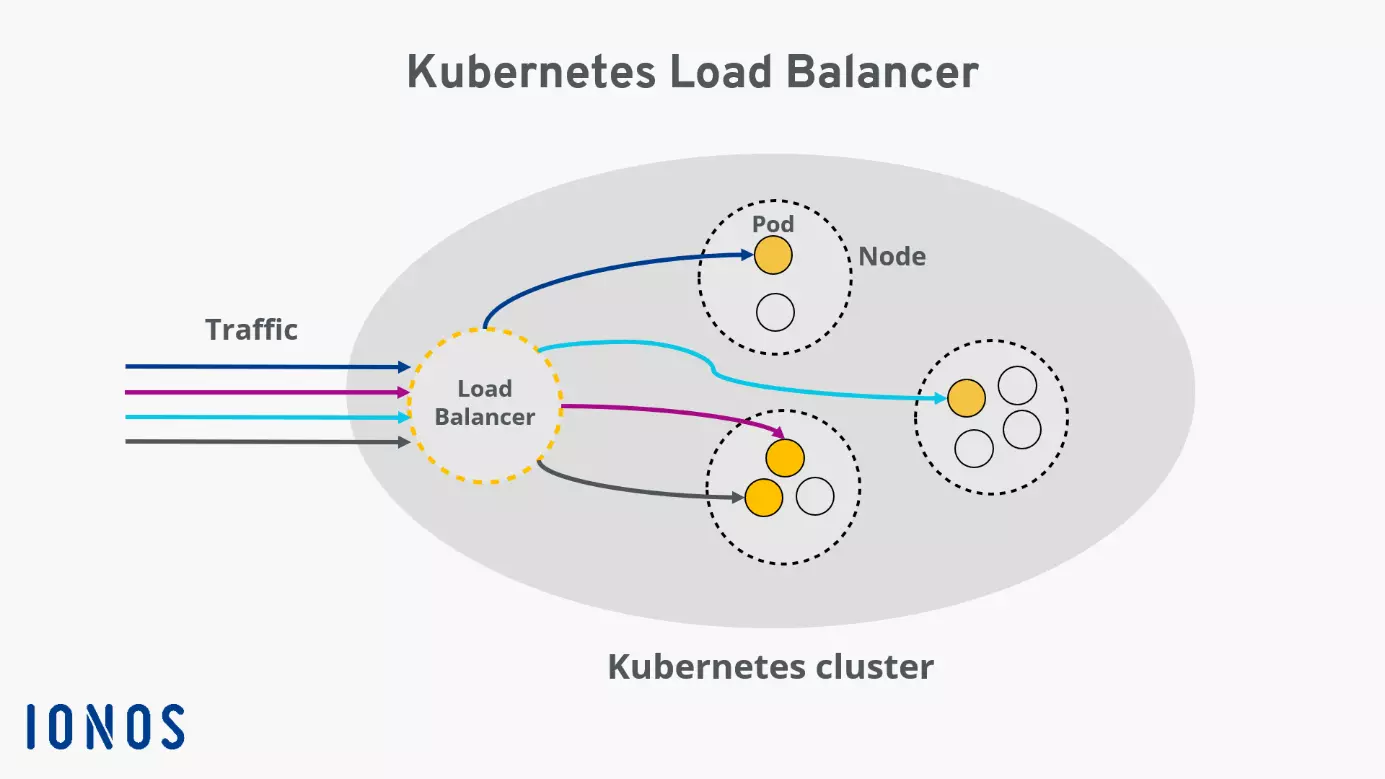

An external load balancer gives a service in a Kubernetes cluster its own IP address or DNS name so that it can receive external requests, such as HTTP requests. The load balancer is a special Kubernetes service type. It is designed to route external traffic to the individual pods in your cluster and to distribute incoming requests among them as evenly as possible.

There are several algorithms for configuring load balancers in Kubernetes, and which one you choose depends on your use case. The algorithms mainly determine the rule by which the load balancer distributes incoming traffic, for example to each pod in turn (round robin) or to the pod with the fewest active connections (least connection).
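Where the distribution rule is set depends on the environment. As one example, in clusters whose kube-proxy runs in IPVS mode, the scheduling algorithm can be chosen in the kube-proxy configuration; the sketch below uses `lc` (least connection), while `rr` (round robin) is the default. Managed Kubernetes offerings often do not let you change this configuration, so treat it as an assumption that applies to self-managed clusters. Independently of the proxy mode, a service can also keep sending a client to the same pod with `sessionAffinity: ClientIP`. The service name, label and ports are hypothetical.

```yaml
# kube-proxy configuration (self-managed clusters only): use IPVS with the
# least-connection scheduler instead of the default round robin.
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  scheduler: "lc"
---
# Service-level rule: requests from the same client IP go to the same pod.
apiVersion: v1
kind: Service
metadata:
  name: web-app               # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: web-app              # hypothetical pod label
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800   # stickiness lasts up to 3 hours (the default value)
  ports:
    - port: 8080
      targetPort: 80
```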


How is a Kubernetes load balancer useful?

A Kubernetes load balancer defines a service that runs in the cluster and is accessible via the public internet. To understand why this is needed, it helps to look at the Kubernetes architecture. A cluster comprises several nodes, which in turn run several pods. Each pod in the cluster is assigned an internal IP address that cannot be reached from outside the cluster. These addresses are also unsuitable as fixed entry points, because pods are created and removed automatically and their IP addresses are reassigned.

A Kubernetes service is usually required to make the software running in the pods available at a fixed address. Besides LoadBalancer, there are other service types, such as ClusterIP and NodePort, that suit different scenarios. What all service types have in common is that they combine a set of pods into a logical unit and describe how they are accessed:

“a Service is an abstraction which defines a logical set of Pods and a policy by which to access them”

– Source: https://kubernetes.io/docs/concepts/services-networking/service/
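As a minimal sketch of this abstraction, the following service of the default type ClusterIP groups all pods that carry the hypothetical label `app: web-app` and makes them reachable inside the cluster under one stable address. The service name and ports are also illustrative assumptions.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-app-internal      # hypothetical name
spec:
  type: ClusterIP             # default type: reachable only inside the cluster
  selector:
    app: web-app              # every pod with this label belongs to the service
  ports:
    - port: 80                # port of the service
      targetPort: 80          # port the containers listen on
```

Setting `type: LoadBalancer` instead would additionally request an external load balancer for the same group of pods.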

A Kubernetes load balancer is intended to distribute external traffic optimally across the pods in your Kubernetes cluster, which makes it suitable for a wide range of use cases. Because it can route traffic to individual pods, it also improves the availability of your application: if a pod stops working or reports errors, the load balancer distributes the requests to the remaining pods.
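A service only forwards traffic to pods that Kubernetes considers ready, and a readiness probe is one way to tell Kubernetes when a pod should stop receiving requests. The sketch below assumes a hypothetical deployment `web-app` running the `nginx` image on port 80; the probe path and timings are illustrative values, not recommendations.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app               # hypothetical name
spec:
  replicas: 3                 # several pods, so others can take over
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app          # label a service can select on
    spec:
      containers:
        - name: web
          image: nginx:1.25   # illustrative image
          ports:
            - containerPort: 80
          readinessProbe:     # pod receives traffic only while this check succeeds
            httpGet:
              path: /
              port: 80
            periodSeconds: 5
            failureThreshold: 3
```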

Load balancers also improve scalability. Kubernetes can automatically create or delete pods as needed, so if incoming traffic requires more or fewer resources than are currently available, Kubernetes can respond automatically, and the load balancer includes new pods in the distribution as soon as they are ready.
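Automatic scaling of this kind is typically configured with a HorizontalPodAutoscaler. The sketch below assumes a hypothetical deployment `web-app`, a metrics server installed in the cluster and CPU requests set on the containers, since the autoscaler needs both to compute utilization. The replica limits and the 70 percent target are illustrative.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-app               # hypothetical name
spec:
  scaleTargetRef:             # the deployment whose pods are scaled
    apiVersion: apps/v1
    kind: Deployment
    name: web-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # aim for 70 percent of the requested CPU on average
```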

Here’s how to create a load balancer for Kubernetes

To create a Kubernetes load balancer, you need to have your cluster running in a cloud or an environment that supports configuring external load balancers.

With IONOS, a static IP address is assigned to a node in the cluster when a Kubernetes load balancer is created. The service can be reached from outside the cluster under this IP address. The kube-proxy component running on the node then distributes incoming traffic to the individual pods.
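By default (`externalTrafficPolicy: Cluster`), kube-proxy may forward a request that arrives at one node to a pod on another node. If traffic should stay on the node that received it and the client's source IP should be preserved, a service can set `externalTrafficPolicy: Local`; a node without a ready pod of the service then does not serve that traffic. Whether this fits the IONOS setup described above is an assumption you should verify. The names and ports are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-app               # hypothetical name
spec:
  type: LoadBalancer
  externalTrafficPolicy: Local  # default is Cluster: any node may forward to any pod
  selector:
    app: web-app              # hypothetical pod label
  ports:
    - port: 8080
      targetPort: 80
```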

First, create a service, then assign it the LoadBalancer service type with the following line:

type: LoadBalancer

A Kubernetes load balancer configuration could look like this, for example. The service groups all pods with the label app: web-app. Incoming traffic to the load balancer IP on port 8080 is distributed to these pods and forwarded to the service running on port 80 of each pod:

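apiVersion: v1
kind: Service
metadata:
  name: web-app-service   # hypothetical name; every service needs one
spec:
  selector:
    app: web-app
  type: LoadBalancer
  loadBalancerIP: 203.0.113.0   # optional; deprecated since Kubernetes v1.24 and not supported by every provider
  ports:
    - name: http
      port: 8080
      targetPort: 80
      protocol: TCP

Another way to create a Kubernetes load balancer is to use the kubectl command line.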

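With the command

kubectl expose deployment test --target-port=9376 \
  --name=test-service --type=LoadBalancer

you create a new service named test-service for the existing deployment test. The service acts as a load balancer and forwards traffic to port 9376 of the pods. Because no --port is given, kubectl takes the port the service listens on from the deployment being exposed.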
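The same kind of service can also be written as a manifest and created with `kubectl apply -f <file>`. This sketch assumes that the pods of the deployment `test` carry the label `app: test` and that the service should listen on port 80; both are assumptions, so adjust the selector and port to match your deployment.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: test-service
spec:
  type: LoadBalancer
  selector:
    app: test                 # assumption: label of the pods in deployment "test"
  ports:
    - port: 80                # assumption: port the service listens on
      targetPort: 9376        # port the pods listen on, as with --target-port
```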

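To find out the IP address of the service you just created, use the following command:

kubectl describe services test-service

The external IP address is listed in the LoadBalancer Ingress field of the output. It can take a moment for the provider to assign it.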