What is CRI-O?

CRI-O is an implementation of the Container Runtime Interface (CRI) for Kubernetes that uses Open Container Initiative (OCI) images and runtimes. Red Hat launched the project in 2016 and handed it over to the Cloud Native Computing Foundation (CNCF) in spring 2019.

How does CRI-O work?

To understand how CRI-O works and how it interacts with related technologies, it is worth looking at the history of container-based virtualization. The starting point was Docker, which brought the virtualization of individual apps in lightweight containers into the mainstream. Before that, virtualization mostly meant using virtual machines. A virtual machine contains an entire operating system, whereas several containers share a single operating system kernel.

From Docker to Kubernetes to CRI-O

A container usually contains a single app which often provides a micro-service. In practical use, several containers are usually controlled together to implement an application. The coordinated management of entire groups of containers is known as orchestration.

Although orchestration is possible with Docker tools such as Docker Swarm, Kubernetes has prevailed as the orchestration platform. Kubernetes groups one or more containers into a pod. Pods in turn run on nodes, which can be either physical or virtual machines.

One of the main problems with Docker was its monolithic architecture. The Docker daemon ran with root privileges and was responsible for many different tasks: downloading container images, executing them in the runtime environment, and building new images. Merging these independent areas violates the software design principle of separation of concerns and leads to security problems in practice. Efforts were therefore made to decouple the individual components.

When Kubernetes was released, its node daemon, the kubelet, contained hard-coded support for the Docker runtime. However, the need to support other runtimes soon became apparent. Modularizing the individual parts promised simpler development and better security. To make different runtimes compatible with Kubernetes, an interface was defined: the Container Runtime Interface (CRI). CRI-O is one specific implementation of this interface.
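To illustrate the idea of a runtime interface, here is a minimal Python sketch. It is not the real CRI, which is defined as a gRPC API. The method names only loosely mirror CRI calls such as RunPodSandbox, CreateContainer, StartContainer and PullImage, and `FakeRuntime` and `kubelet_start_pod` are hypothetical names invented for this example.

```python
from typing import List, Protocol


class ContainerRuntime(Protocol):
    """Toy stand-in for the CRI; the real interface is a gRPC API."""

    def pull_image(self, image: str) -> str: ...
    def run_pod_sandbox(self, pod_name: str) -> str: ...
    def create_container(self, sandbox_id: str, image_ref: str) -> str: ...
    def start_container(self, container_id: str) -> None: ...


class FakeRuntime:
    """Hypothetical in-memory runtime, used only for illustration."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.started: List[str] = []

    def pull_image(self, image: str) -> str:
        return f"{self.name}/image/{image}"

    def run_pod_sandbox(self, pod_name: str) -> str:
        return f"{self.name}/sandbox/{pod_name}"

    def create_container(self, sandbox_id: str, image_ref: str) -> str:
        # Use the last path segment of the image reference as the container name.
        return f"{sandbox_id}/container/{image_ref.rsplit('/', 1)[-1]}"

    def start_container(self, container_id: str) -> None:
        self.started.append(container_id)


def kubelet_start_pod(runtime: ContainerRuntime, pod_name: str, images: List[str]) -> List[str]:
    """Start one container per image inside a single pod sandbox."""
    sandbox_id = runtime.run_pod_sandbox(pod_name)
    container_ids = []
    for image in images:
        image_ref = runtime.pull_image(image)
        container_id = runtime.create_container(sandbox_id, image_ref)
        runtime.start_container(container_id)
        container_ids.append(container_id)
    return container_ids
```

The caller depends only on the interface, so any object that offers these four methods can be swapped in without changing `kubelet_start_pod`. That is the decoupling the CRI was meant to provide.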

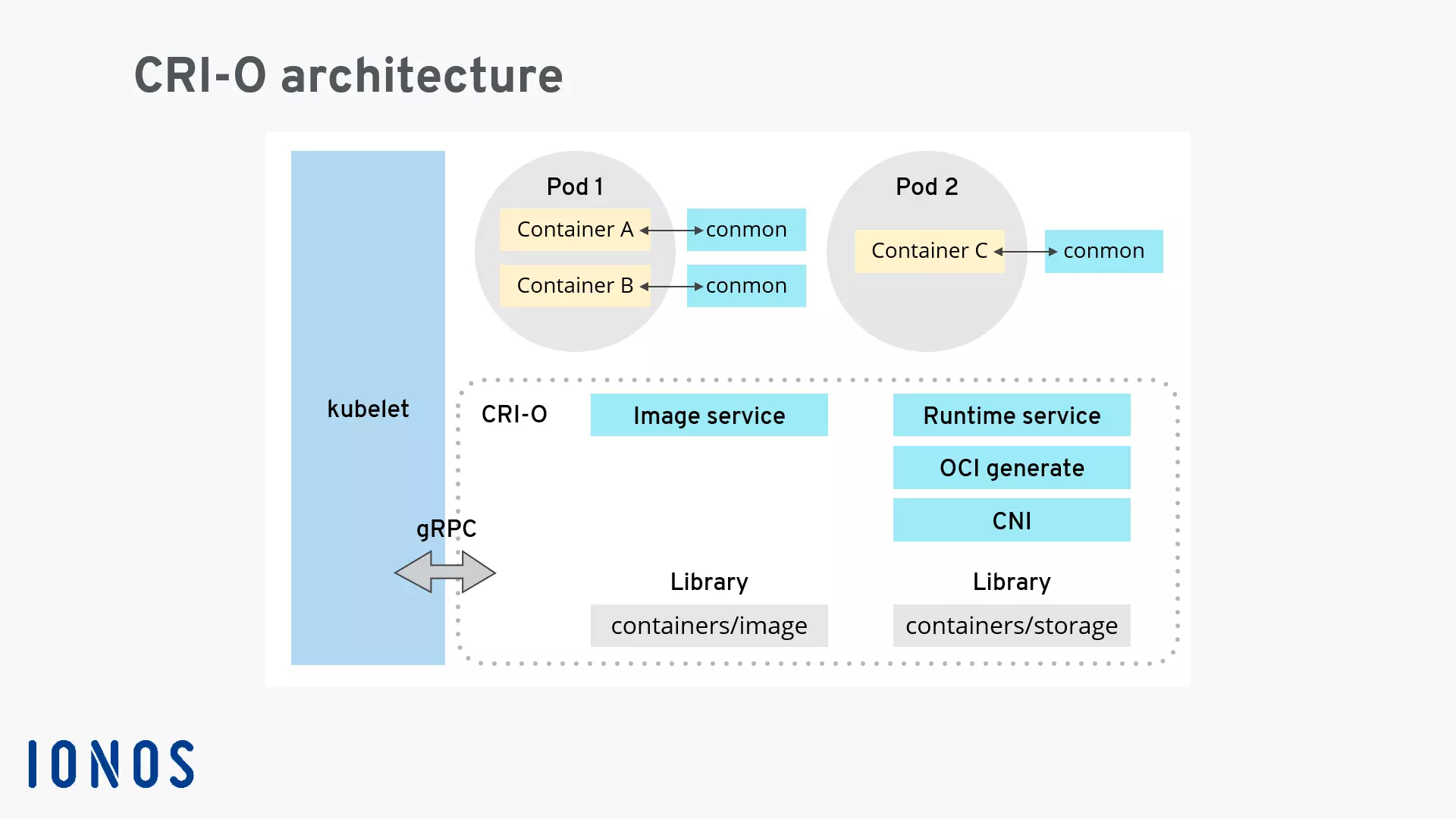

Architecture and function of CRI-O

The following components are part of CRI-O:

- The software library containers/image to download container images from various online sources.

- The software library containers/storage to manage container layers and create the file system for container pods.

- An OCI-compatible runtime to run the container; the standard runtime is runC, but other OCI-compatible runtimes like Kata Containers can be used.

- The Container Network Interface (CNI) to set up the network for a pod; plugins such as Flannel, Weave and OpenShift-SDN can be used.

- The container monitoring tool conmon to continuously monitor the container.

CRI-O is often used in conjunction with the pod management tool Podman. This works because Podman relies on the same libraries for downloading the container images and managing the container layers as CRI-O.

In principle, using CRI-O consists of the following steps:

- Download an OCI container image

- Extract the image into an OCI runtime file system bundle

- Run the container with an OCI runtime
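The three steps above can be sketched in a few lines of Python. This is a simplified illustration, not how CRI-O is implemented: the "downloaded" layers are built in memory instead of being pulled from a registry, only regular files are unpacked, and the `config.json` is deliberately incomplete. The function names are hypothetical. The bundle layout (a `rootfs` directory next to a `config.json`) follows the OCI runtime specification, and the final command is only constructed, not executed.

```python
import io
import json
import tarfile
import tempfile
from pathlib import Path
from typing import Dict, List


def make_layer(files: Dict[str, bytes]) -> bytes:
    """Build an in-memory tar archive standing in for a downloaded image layer."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def unpack_bundle(layers: List[bytes], args: List[str], bundle: Path) -> Path:
    """Apply layers in order into rootfs/ and write a minimal config.json."""
    rootfs = bundle / "rootfs"
    rootfs.mkdir(parents=True)
    for layer in layers:  # later layers overwrite files from earlier ones
        with tarfile.open(fileobj=io.BytesIO(layer)) as tar:
            for member in tar.getmembers():
                if member.isfile():  # sketch: regular files only
                    target = rootfs / member.name
                    target.parent.mkdir(parents=True, exist_ok=True)
                    target.write_bytes(tar.extractfile(member).read())
    config = {"ociVersion": "1.0.2", "process": {"args": args}, "root": {"path": "rootfs"}}
    (bundle / "config.json").write_text(json.dumps(config, indent=2))
    return bundle


def runtime_command(bundle: Path, container_id: str) -> List[str]:
    """Command line with which an OCI runtime such as runc could be invoked."""
    return ["runc", "run", "--bundle", str(bundle), container_id]


with tempfile.TemporaryDirectory() as tmp:
    base = make_layer({"etc/motd": b"base\n", "bin/app": b"v1"})
    patch = make_layer({"bin/app": b"v2"})
    bundle = unpack_bundle([base, patch], ["/bin/app"], Path(tmp) / "bundle")
    app_bytes = (bundle / "rootfs" / "bin" / "app").read_bytes()
    motd_bytes = (bundle / "rootfs" / "etc" / "motd").read_bytes()
    config_root = json.loads((bundle / "config.json").read_text())["root"]["path"]
    command = runtime_command(bundle, "demo")
```

In the demo at the bottom, the second layer replaces `bin/app` from the first one, which mirrors how stacked image layers produce the final file system of a container.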

When is CRI-O used?

Currently, CRI-O is primarily used as part of Red Hat’s OpenShift product line. OpenShift implementations exist for cloud platforms from all major providers. Furthermore, the software can be operated as part of the OpenShift Container Platform in public or private data centers. Here is an overview of the various OpenShift products:

| Product | Infrastructure | Managed by | Supported by |
|---|---|---|---|
| Red Hat OpenShift Dedicated | AWS, Google Cloud | Red Hat | Red Hat |
| Microsoft Azure Red Hat OpenShift | Microsoft Azure | Red Hat and Microsoft | Red Hat and Microsoft |
| Amazon Red Hat OpenShift | AWS | Red Hat and AWS | Red Hat and AWS |
| Red Hat OpenShift on IBM Cloud | IBM Cloud | IBM | Red Hat and IBM |
| Red Hat OpenShift Online | Red Hat | Red Hat | Red Hat |
| Red Hat OpenShift Container Platform | Private cloud, public cloud, physical machine, virtual machine, edge | Customer | Red Hat, others |
| Red Hat OpenShift Kubernetes Engine | Private cloud, public cloud, physical machine, virtual machine, edge | Customer | Red Hat, others |

What differentiates CRI-O from other runtimes?

CRI-O is a relatively new development in container virtualization, and several alternative container runtimes already existed before it. Its main distinguishing feature is its exclusive focus on Kubernetes as the environment. With CRI-O, Kubernetes can run containers directly, without additional tools or special code adjustments. CRI-O works with existing OCI-compatible runtimes. Here is an overview of actively developed and frequently used runtimes:

| Runtime | Type | Description |
|---|---|---|
| runC | Low-level OCI runtime | De facto standard runtime that emerged from Docker and is written in Go |
| crun | Low-level OCI runtime | High-performance runtime; implemented in C instead of Go |
| Kata Containers | Virtualized OCI runtime | Runs containers in lightweight virtual machines (VMs) |
| containerd | High-level CRI runtime | Uses runC by default |
| CRI-O | Lightweight CRI runtime | Can use runC, crun and Kata Containers, among others |
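Because CRI-O can delegate to several low-level runtimes, something has to decide which one handles a given pod. The following Python sketch shows one plausible way to model that choice. It assumes a named handler per runtime with a default fallback, which is similar in spirit to how a Kubernetes RuntimeClass refers to a handler. The table contents, `DEFAULT_HANDLER` and `resolve_handler` are hypothetical and do not reflect CRI-O's actual code or configuration format.

```python
from typing import Dict, Optional

# Hypothetical handler table; the entries mirror the runtimes listed above.
RUNTIME_HANDLERS: Dict[str, str] = {
    "runc": "low-level OCI runtime",
    "crun": "low-level OCI runtime",
    "kata": "virtualized OCI runtime",
}
DEFAULT_HANDLER = "runc"  # assumption: runC as the standard runtime


def resolve_handler(requested: Optional[str]) -> str:
    """Return the handler to use, falling back to the default when none is requested."""
    if not requested:
        return DEFAULT_HANDLER
    if requested not in RUNTIME_HANDLERS:
        raise ValueError(f"no runtime handler named {requested!r}")
    return requested
```

A pod that names no handler ends up on the default runtime, while one that asks for `"kata"` is routed to the virtualized runtime. An unknown name is rejected rather than silently replaced.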