Fog computing

The Internet of Things (IoT) has been transforming IT landscapes all over the world and is already seen as a key technology for many future-facing projects. However, traditional IoT architectures, in which data is collected and processed centrally, cannot scale indefinitely because of limitations such as network bandwidth. Fog computing is a field in which solutions to these IoT implementation issues are being developed.

What is fog computing? A definition

Fog computing is a cloud technology in which data generated by end devices isn’t uploaded directly to the cloud but is instead preprocessed in decentralized mini data centers. The concept involves a network structure that extends from the network’s outer perimeter (where IoT devices generate data) to a central data endpoint in a public cloud or a private data center (private cloud).

The aim of “fogging” is to shorten communication distances and reduce data transmission through external networks. Fog nodes form an intermediate layer in the network where it is decided which data is processed locally and which is forwarded to the cloud or to a central data center for further analysis or processing.
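As a minimal sketch of that decision, the Python function below assumes a hypothetical `Reading` type and an illustrative rule: values inside an expected range are treated as routine and handled on the fog node, while outliers are forwarded to the cloud for further analysis. Real fog nodes would apply application-specific policies.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    # Hypothetical sensor reading: the reporting device and its measured value
    device_id: str
    value: float


def route(reading: Reading, low: float, high: float) -> str:
    """Decide where a reading is processed (illustrative rule only).

    In-range values are considered routine and handled on the fog node;
    out-of-range values are forwarded to the cloud for further analysis.
    """
    if low <= reading.value <= high:
        return "local"
    return "cloud"


readings = [Reading("s1", 21.5), Reading("s2", 95.0), Reading("s3", 22.1)]
decisions = {r.device_id: route(r, low=15.0, high=30.0) for r in readings}
print(decisions)  # {'s1': 'local', 's2': 'cloud', 's3': 'local'}
```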

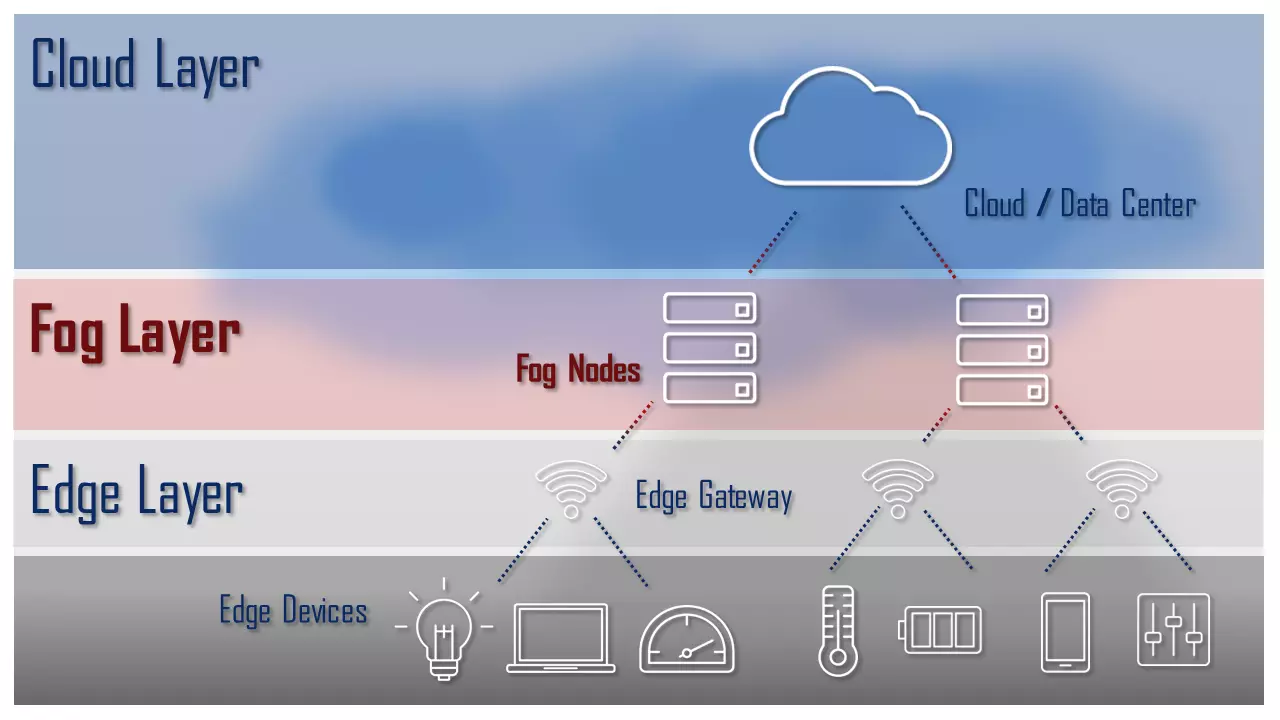

The schematic illustration above shows the three layers of a fog computing architecture:

- Edge layer: The edge layer includes all of an IoT architecture’s “smart” devices (edge devices). Data generated from the edge layer is either processed on the device directly or transmitted to a server (fog node) in the fog layer.

- Fog layer: The fog layer consists of a number of powerful servers (fog nodes) that receive data from the edge layer, preprocess it and upload it to the cloud as needed.

- Cloud layer: The cloud layer is the central data endpoint of a fog computing architecture.
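The interplay of the three layers can be sketched in Python as follows. The class names `EdgeDevice`, `FogNode` and `CloudLayer`, the batch size and the choice of summary statistics are illustrative assumptions, not part of any reference architecture: edge devices discard faulty readings on the device, the fog node buffers the remaining data and uploads one compact summary per batch, and the cloud layer simply stores what arrives.

```python
from statistics import mean


class CloudLayer:
    """Cloud layer: central data endpoint that stores whatever arrives."""

    def __init__(self):
        self.received = []

    def upload(self, record):
        self.received.append(record)


class FogNode:
    """Fog layer: buffers edge data and uploads one summary per batch."""

    def __init__(self, cloud, batch_size):
        self.cloud = cloud
        self.batch_size = batch_size  # readings per summary (assumed value)
        self.buffer = []              # (device_id, value) pairs awaiting upload

    def receive(self, device_id, value):
        self.buffer.append((device_id, value))
        if len(self.buffer) >= self.batch_size:
            values = [v for _, v in self.buffer]
            # Preprocessing: replace the raw batch with a compact summary
            self.cloud.upload({
                "count": len(values),
                "mean": mean(values),
                "max": max(values),
                "sources": sorted({d for d, _ in self.buffer}),
            })
            self.buffer.clear()


class EdgeDevice:
    """Edge layer: a smart device that does minimal processing on the device."""

    def __init__(self, device_id, fog):
        self.device_id = device_id
        self.fog = fog

    def measure(self, raw):
        if raw is None:
            return  # faulty reading: handled on the device, nothing is transmitted
        self.fog.receive(self.device_id, raw)


cloud = CloudLayer()
fog = FogNode(cloud, batch_size=4)
devices = [EdgeDevice("sensor-0", fog), EdgeDevice("sensor-1", fog)]
for raw in [20.0, None, 22.0, 24.0]:
    for device in devices:
        device.measure(raw)

print(cloud.received)
# [{'count': 4, 'mean': 21.0, 'max': 22.0, 'sources': ['sensor-0', 'sensor-1']}]
```

Six valid readings reach the fog node, but only one summary reaches the cloud; the last two readings stay buffered until the next batch is complete.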

A reference architecture for fog systems was developed by the OpenFog Consortium, which is now part of the Industry IoT Consortium (IIC). Further white papers on fog computing are available on the IIC website.

How is fog computing different from cloud computing?

The difference between fog and cloud computing lies in how resources are provided and how data is processed. Cloud computing usually takes place in centralized data centers. Resources such as processing power and storage are bundled by backend servers and made available to clients through the network. Communication between two or more end devices always takes place via a server in the background.

Systems like those used in smart manufacturing require data to be exchanged continuously between countless end devices, which pushes a centralized architecture beyond its limits. Fog computing instead uses intermediate processing close to the data source to reduce data throughput to the data center.
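A back-of-the-envelope calculation shows why intermediate processing matters. All figures below (10,000 devices, 10 readings per second, 100 bytes per reading, 50 fog nodes each uploading a 2,000-byte summary per second) are hypothetical and chosen only for illustration.

```python
def raw_upstream_rate(devices, readings_per_s, bytes_per_reading):
    """Bytes per second if every reading goes straight to the data center."""
    return devices * readings_per_s * bytes_per_reading


def fog_upstream_rate(fog_nodes, summary_bytes, window_s):
    """Bytes per second if each fog node uploads one summary per time window."""
    return fog_nodes * summary_bytes / window_s


# Hypothetical example figures, not measurements
raw_rate = raw_upstream_rate(devices=10_000, readings_per_s=10, bytes_per_reading=100)
fog_rate = fog_upstream_rate(fog_nodes=50, summary_bytes=2_000, window_s=1)

print(f"raw: {raw_rate / 1e6:.1f} MB/s, fog: {fog_rate / 1e6:.1f} MB/s, "
      f"reduction: {raw_rate / fog_rate:.0f}x")
# raw: 10.0 MB/s, fog: 0.1 MB/s, reduction: 100x
```

Under these assumptions, the fog layer cuts upstream traffic to the data center by a factor of 100; the actual reduction depends entirely on how much the fog nodes can summarize or filter.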

How is fog computing different from edge computing?

It’s not only the data throughput of large-scale IoT architectures that pushes cloud computing to its limits, though. Another problem is latency. Centralized data processing always involves a time delay due to long transmission paths. Because end devices and sensors have to communicate with each other via the server in the data center, both the request and the response are delayed by the round trip to the external data center. Such latency becomes problematic in IoT-supported production processes, where real-time information processing is essential so that machines can react immediately when an incident occurs.
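Distance alone already imposes a lower bound on latency. The sketch below uses the approximate signal speed in optical fiber (roughly 200 km per millisecond, about two-thirds of the speed of light in a vacuum) and hypothetical distances of 1,000 km to a central data center and 10 km to a fog node. It counts propagation delay only; real round trips are longer because of routing, queuing and processing.

```python
FIBER_KM_PER_MS = 200.0  # approximate signal speed in optical fiber


def round_trip_ms(distance_km):
    """Propagation-only round-trip time; ignores routing, queuing and processing."""
    return 2 * distance_km / FIBER_KM_PER_MS


cloud_rtt = round_trip_ms(1_000)  # hypothetical distance to a central data center
fog_rtt = round_trip_ms(10)       # hypothetical distance to a nearby fog node

print(f"cloud: {cloud_rtt:.1f} ms, fog: {fog_rtt:.1f} ms")
# cloud: 10.0 ms, fog: 0.1 ms
```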

One solution to the latency problem is edge computing, a concept within the framework of fog computing in which data processing is not only decentralized but takes place directly in the end device at the edge of the network. Each smart device is equipped with its own microcontroller, enabling basic data processing and communication with other IoT devices and sensors. This reduces not only latency but also the data throughput at the central data center.

While fog computing and edge computing are closely related, they are not the same thing. The crucial difference lies in where and when the data is processed. With edge computing, data is processed where it is generated and, in most cases, sent immediately after processing. In contrast, fog computing collects raw data from multiple sources and processes it in a data center located between the data sources and a centralized data center. Processing the data this way makes it possible to avoid forwarding irrelevant data or results to the central data center. Whether edge computing, fog computing or a combination of both is best depends heavily on the individual use case.
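The difference can be illustrated with a hypothetical set of three sensors that each report `ok` or `alarm` once per cycle. In the edge variant, every device processes its own data and sends each result immediately. In the fog variant, a fog node sees the results of all sensors together and forwards an alarm only if at least `quorum` of them agree, a cross-source check that a single edge device cannot perform on its own. The quorum rule is an assumption chosen for illustration.

```python
def edge_messages(statuses_per_device):
    """Edge: each device processes its own data and sends every result immediately."""
    return [(dev, s) for dev, statuses in statuses_per_device.items() for s in statuses]


def fog_messages(statuses_per_device, quorum):
    """Fog: collect results from all sources per cycle, forward only confirmed alarms.

    An alarm is forwarded only if at least `quorum` devices report it in the
    same cycle (an illustrative cross-source plausibility check).
    """
    forwarded = []
    cycles = zip(*statuses_per_device.values())  # one tuple of statuses per cycle
    for cycle, statuses in enumerate(cycles):
        alarms = sum(1 for s in statuses if s == "alarm")
        if alarms >= quorum:
            forwarded.append(("fog-node", f"alarm in cycle {cycle}"))
    return forwarded


statuses = {
    "sensor-a": ["ok", "alarm", "ok", "alarm"],
    "sensor-b": ["ok", "ok", "ok", "alarm"],
    "sensor-c": ["ok", "ok", "ok", "alarm"],
}
print(len(edge_messages(statuses)))      # 12
print(fog_messages(statuses, quorum=2))  # [('fog-node', 'alarm in cycle 3')]
```

The isolated alarm from `sensor-a` in cycle 1 is treated as irrelevant and never reaches the central data center.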

What are the advantages of fog computing?

Fog computing offers solutions to a variety of problems associated with cloud-based IT infrastructures. It prioritizes short communication paths and keeps uploads to the cloud to a minimum. Here are the most important advantages:

- Less network traffic: Fog computing reduces traffic between IoT devices and the cloud.

- Cost savings when using third-party networks: Network providers charge high fees for high-speed uploads to the cloud. Fog computing reduces these costs by minimizing the amount of data uploaded.

- Offline availability: In a fog computing architecture, IoT devices remain available even when the connection to the cloud is interrupted.

- Less latency: Fog computing shortens communication paths, accelerating automated analysis and decision-making processes.

- Data security: In fogging, device data is often preprocessed within the local network. This makes it possible for sensitive data to remain within the company or to be encrypted or anonymized before it is uploaded to the cloud.
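Offline availability and data security can both be handled at the fog node before anything reaches the cloud. The following sketch assumes a hypothetical `FogUploader` class and a `send` callable that raises `ConnectionError` when the cloud is unreachable. Device IDs are pseudonymized with HMAC-SHA256 using a key that never leaves the local network, and records are queued locally while the connection is down.

```python
import hashlib
import hmac
from collections import deque


class FogUploader:
    """Hypothetical fog-node uploader that pseudonymizes and buffers records."""

    def __init__(self, send, secret_key: bytes, max_buffer: int = 1000):
        self.send = send          # uploads one record; raises ConnectionError when offline
        self.key = secret_key     # stays on premises, so the cloud cannot reverse pseudonyms
        self.queue = deque(maxlen=max_buffer)  # when full, the oldest record is dropped

    def pseudonymize(self, device_id: str) -> str:
        # Keyed hash: the same device always maps to the same 64-char hex pseudonym
        return hmac.new(self.key, device_id.encode(), hashlib.sha256).hexdigest()

    def submit(self, device_id: str, value: float) -> None:
        self.queue.append({"device": self.pseudonymize(device_id), "value": value})
        self.flush()

    def flush(self) -> None:
        # Send queued records in order; stop at the first failure and retry later
        while self.queue:
            try:
                self.send(self.queue[0])
            except ConnectionError:
                return  # still offline: keep the records for the next attempt
            self.queue.popleft()


online = False
uploaded = []


def fake_send(record):
    # Stand-in for a real cloud client (hypothetical)
    if not online:
        raise ConnectionError("cloud unreachable")
    uploaded.append(record)


uploader = FogUploader(fake_send, secret_key=b"kept-on-premises")
uploader.submit("press-07", 81.5)  # cloud offline: record stays queued
online = True
uploader.submit("press-07", 82.0)  # back online: both records are sent
print(len(uploaded), len(uploader.queue))  # 2 0
```

Because the queue is bounded, the oldest records are discarded if an outage lasts longer than the buffer can cover; sizing `max_buffer` (or persisting the queue to disk) is a design decision for each deployment.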

What are the disadvantages of fog computing?

Decentralized processing in mini data centers also comes with its own set of disadvantages, chiefly the cost and complexity of maintaining and managing a distributed system. The main disadvantages of fog computing systems are:

- Higher hardware costs: Fog computing requires that IoT devices and sensors be equipped with additional processing units to enable local data processing and device-to-device communication.

- Increased maintenance requirements: Decentralized data processing requires more maintenance, since processing and storage locations are distributed across the entire network and, unlike cloud solutions, can’t be maintained or administered centrally.

- Additional network security requirements: Every fog node and network link between the end devices and the cloud is a potential target for man-in-the-middle attacks and must be secured accordingly.
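One common countermeasure is to authenticate every message exchanged between edge devices and fog nodes. The sketch below uses HMAC-SHA256 from Python’s standard library with a hypothetical pre-shared key, so that a fog node can reject messages altered in transit. It is only an illustration: it does not encrypt data or prevent replayed messages, and production systems would typically rely on TLS with mutual authentication instead.

```python
import hashlib
import hmac
import json

SHARED_KEY = b"provisioned-per-device"  # hypothetical; provision keys securely in practice


def sign(payload: dict, key: bytes) -> dict:
    # Canonical JSON (sorted keys) so sender and receiver hash identical bytes
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": body.decode(), "tag": tag}


def verify(message: dict, key: bytes) -> dict:
    body = message["body"].encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("message was modified or not signed with the shared key")
    return json.loads(body)


msg = sign({"device": "valve-3", "command": "close"}, SHARED_KEY)
print(verify(msg, SHARED_KEY))  # {'command': 'close', 'device': 'valve-3'}

# An attacker in the middle rewrites the command but cannot recompute the tag
tampered = dict(msg, body=msg["body"].replace("close", "open"))
try:
    verify(tampered, SHARED_KEY)
except ValueError as err:
    print("rejected:", err)
```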