What is Apache Hive and when is it used?

Although many software experts expect the data warehouse system Apache Hive to fade away, it’s still regularly used to manage large data sets. Many Apache Hive features can also be found in its successors. For this reason, it’s worth taking a closer look at Hive and its most important uses.

What is Apache Hive?

Apache Hive is a scalable extension to the Apache Hadoop storage architecture. In Hadoop architectures, complex computing tasks are broken down into small processes and distributed in parallel across the nodes of a computer cluster. This allows large amounts of data to be processed with standard hardware such as ordinary servers and computers. Within this setup, Apache Hive serves as an open source query and analysis system for your data warehouse. With Hive, you can analyze, query and summarize data using HiveQL, a database language similar to SQL. This makes Hadoop data available even to large user groups.

With Hive you use syntax that is similar to SQL:1999 to structure programs, applications and databases or to integrate scripts. Before Hive came along, you needed to understand Java programming to query data in Hadoop. Hive translates queries into jobs that the underlying system can execute, for example MapReduce jobs. It’s also possible to integrate other SQL-based applications into the Hadoop framework using Hive. Because SQL is so widespread, Hive as a Hadoop extension makes it easier for non-experts to work with databases and large amounts of data.

How does Hive work?

Before Apache Hive expanded the Hadoop framework, the Hadoop ecosystem relied on the MapReduce framework, which is based on a programming model developed by Google. In Hadoop 1, MapReduce was still implemented as a standalone engine that managed, monitored and controlled resources and computing processes directly in the framework. This in turn required knowledge of Java to successfully query Hadoop files.

The primary Hive functions for using and managing large data sets can be summarized as follows:

- Data summaries

- Queries

- Analysis

Hive works on a simple principle: it provides an interface similar to SQL and translates HiveQL queries and analyses of Hadoop files into MapReduce, Spark or Tez jobs. To do this, Hive organizes data from the Hadoop framework into HDFS-compatible table formats. HDFS is short for Hadoop Distributed File System. Targeted data queries can then be carried out via specific clusters and nodes in the Hadoop system. Hive also offers standard features such as filters, aggregations and joins.
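To make the translation step more tangible, here is a minimal Python sketch of how a simple aggregation query could be split into map, shuffle and reduce phases. It is not what Hive's compiler actually generates; the `visits` table, its rows and all function names are assumptions made purely for illustration.

```python
from collections import defaultdict

# Query being imitated (HiveQL):
#   SELECT page, COUNT(*) FROM visits GROUP BY page;

# Hypothetical rows of a "visits" table: (user, page)
visits = [
    ("anna", "/home"),
    ("ben", "/pricing"),
    ("anna", "/pricing"),
    ("cara", "/home"),
    ("ben", "/home"),
]


def map_phase(rows):
    """Emit one (key, 1) pair per row, keyed by the GROUP BY column."""
    for _user, page in rows:
        yield page, 1


def shuffle_phase(pairs):
    """Collect all emitted values per key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Aggregate the values of each key; summing the ones gives COUNT(*)."""
    return {key: sum(values) for key, values in grouped.items()}


result = reduce_phase(shuffle_phase(map_phase(visits)))
print(result)
```

In a real cluster the map and reduce steps would run in parallel on different nodes; the sketch runs them one after another only to show the division of work.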

Hive based on Schema-on-Read

Unlike relational databases, which work on the Schema-on-Write (SoW) principle, Hive is based on the Schema-on-Read (SoR) principle. This means that data in the Hadoop framework is first stored unedited rather than being saved in a pre-defined schema. The data is only matched to a schema when a Hive query reads it. The main advantages show up in cloud computing: more scalability, more flexibility and quicker load times for databases distributed across clusters.
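The Schema-on-Read idea can be illustrated with a short Python sketch. It is only a simplified model, not Hive code: the raw lines, the column names and the `read_with_schema` helper are hypothetical. The point is that nothing is validated when the lines are stored, and values that do not fit the schema only surface as empty values (NULL) at read time.

```python
from datetime import date

# Raw lines as they might sit in a file: stored unedited, nothing is checked on write.
raw_lines = [
    "1\tanna\t2024-01-05",
    "2\tben\tnot-a-date",  # accepted on write although the date is invalid
    "3\tcara",             # accepted on write although a column is missing
]

# The schema is kept separately from the data and applied only when reading.
schema = [("id", int), ("name", str), ("signup_date", date.fromisoformat)]


def read_with_schema(lines, schema):
    """Split each raw line and cast its fields according to the schema."""
    rows = []
    for line in lines:
        fields = line.split("\t")
        row = {}
        for index, (column, cast) in enumerate(schema):
            try:
                row[column] = cast(fields[index])
            except (IndexError, ValueError):
                row[column] = None  # missing or unparseable values are read as NULL
        rows.append(row)
    return rows


rows = read_with_schema(raw_lines, schema)
for row in rows:
    print(row)
```

Because the schema is separate from the stored lines, the same file could later be read with a different schema without rewriting the data.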

How to work with data in Hive

To query and analyze data with Hive, you use Apache Hive tables in accordance with the Schema-on-Read principle. You use Hive to organize and sort data in these tables in small, detailed units or in large, general ones. Hive tables can be divided into “buckets”, in other words subsets of the data. To access the data, you use HiveQL, a database language similar to SQL. Among other things, Hive tables can be overwritten, appended to and serialized in databases. Each Hive table has its own HDFS directory for this.
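As a rough illustration of how rows could be spread over directories and buckets, the following Python sketch computes a target directory and a bucket number for each row. Everything in it is an assumption for demonstration: the warehouse root path, the `visits` table, the `day` partition column, the bucket count and, above all, the hash function, which is a stand-in and not the hash Hive uses internally.

```python
import zlib

WAREHOUSE_DIR = "/user/hive/warehouse"  # assumed warehouse root, configurable in practice
NUM_BUCKETS = 4                         # hypothetical bucket count for the table


def partition_dir(table, partition_column, partition_value):
    """Build the directory a partition of the table would live in."""
    return f"{WAREHOUSE_DIR}/{table}/{partition_column}={partition_value}"


def bucket_for(key, num_buckets=NUM_BUCKETS):
    """Assign a key to a bucket via hash modulo bucket count.

    crc32 is only a deterministic stand-in; Hive's own hash differs.
    """
    return zlib.crc32(key.encode("utf-8")) % num_buckets


# Hypothetical rows: (user, day)
rows = [("anna", "2024-01-05"), ("ben", "2024-01-05"), ("cara", "2024-01-06")]

layout = {}
for user, day in rows:
    target = (partition_dir("visits", "day", day), bucket_for(user))
    layout.setdefault(target, []).append(user)

for (directory, bucket), users in sorted(layout.items()):
    print(directory, "bucket", bucket, users)
```

The useful property is that the same key always lands in the same bucket, so a query that filters or joins on that key only needs to look at a subset of the files.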

Hive’s most important features

Hive’s key features include querying and analyzing large data sets that are saved as Hadoop files in a Hadoop framework. A second primary task carried out by Hive is translating HiveQL queries into MapReduce, Spark and Tez jobs.

Here’s a summary of other important Hive functions:

- Storing metadata in relational database management systems

- Using compressed data in Hadoop systems

- UDFs (user defined functions) for data processing and data mining

- Support for storage types such as RCFile, text files or HBase

- Using MapReduce and ETL support

What is HiveQL?

When talking about Hive, you’ll often hear the term “similar to SQL”. This refers to the Hive database language HiveQL, which is based on SQL but doesn’t fully conform to standards such as SQL-92. HiveQL can, therefore, be considered a kind of SQL dialect, much like the MySQL dialect. Despite all the similarities, the languages do differ in some essential respects. For example, HiveQL supports many SQL features for transactions or subqueries only partially. On the other hand, it has its own extensions such as multi-table inserts, which offer better scalability and performance in the Hadoop framework. The Apache Hive compiler translates HiveQL queries into MapReduce, Tez and Spark jobs.
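The benefit of a multi-table insert is that the source is scanned once while several targets are filled. The Python sketch below models that idea under stated assumptions: the table names `visits`, `home_visits` and `pricing_visits` and the helper `multi_insert` are hypothetical, and the HiveQL statement in the comment is shown only to indicate what is being imitated.

```python
# HiveQL statement being imitated:
#   FROM visits
#   INSERT OVERWRITE TABLE home_visits    SELECT * WHERE page = '/home'
#   INSERT OVERWRITE TABLE pricing_visits SELECT * WHERE page = '/pricing';

# Hypothetical source rows
visits = [
    {"user": "anna", "page": "/home"},
    {"user": "ben", "page": "/pricing"},
    {"user": "anna", "page": "/pricing"},
    {"user": "cara", "page": "/home"},
    {"user": "ben", "page": "/home"},
]

# Each hypothetical target table is paired with the predicate of its INSERT clause.
targets = {
    "home_visits": lambda row: row["page"] == "/home",
    "pricing_visits": lambda row: row["page"] == "/pricing",
}


def multi_insert(source_rows, targets):
    """Fill every target in a single pass over the source rows."""
    outputs = {name: [] for name in targets}
    scanned = 0
    for row in source_rows:  # the source is read exactly once
        scanned += 1
        for name, predicate in targets.items():
            if predicate(row):
                outputs[name].append(row)
    return outputs, scanned


outputs, scanned = multi_insert(visits, targets)
print(scanned, {name: len(rows) for name, rows in outputs.items()})
```

Running two separate inserts would read the source twice; here the scan count equals the number of source rows regardless of how many targets are filled.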

Data security and Apache Hive

By integrating Apache Hive into Hadoop systems, you can also benefit from the Kerberos authentication service. This gives you reliable, mutual authentication and verification between servers and users. Since HDFS specifies permissions for new Hive files, authorizing users and groups is up to you. Another important security aspect is that Hive allows critical workflows to be recovered when needed.

What are the benefits of Apache Hive?

If you’re working with large amounts of data in cloud computing or with Big Data as a Service, Hive offers many useful features such as:

- Ad-hoc queries

- Data analysis

- The creation of tables and partitions

- Support for logical, relational and arithmetic operators

- The monitoring and checking of transactions

- End-of-day reports

- The loading of query results in HDFS directories

- The transfer of table data to local directories

The main benefits of this include:

- Qualitative findings on large amounts of data, e.g. for data mining and machine learning

- Optimized scalability, cost efficiency and expandability for large Hadoop frameworks

- Segmentation of user groups through clickstream analysis

- No deep knowledge of Java programming processes required thanks to HiveQL

- Competitive advantages due to faster, scalable reaction times and performance

- Storage of hundreds of petabytes of data and up to 100,000 data queries per hour without high-end infrastructure

- Better resource utilization and quicker computing and loading times depending on the workload thanks to virtualization capabilities

- Good, error-proof data security thanks to improved emergency restoration options and the Kerberos authentication service

- Faster data loading since there is no need to adapt data for internal databases (Hive reads and analyzes data without any manual format changes)

- Works under the open source principle

What are the disadvantages of Apache Hive?

One of the disadvantages of Apache Hive is that it already has many successors which offer better performance. Experts therefore consider Hive to be less relevant for managing and using databases.

Other disadvantages include:

- No real time data access

- Complex processing and updating of data sets through the Hadoop framework and MapReduce

- High latency, meaning it’s much slower than competing systems

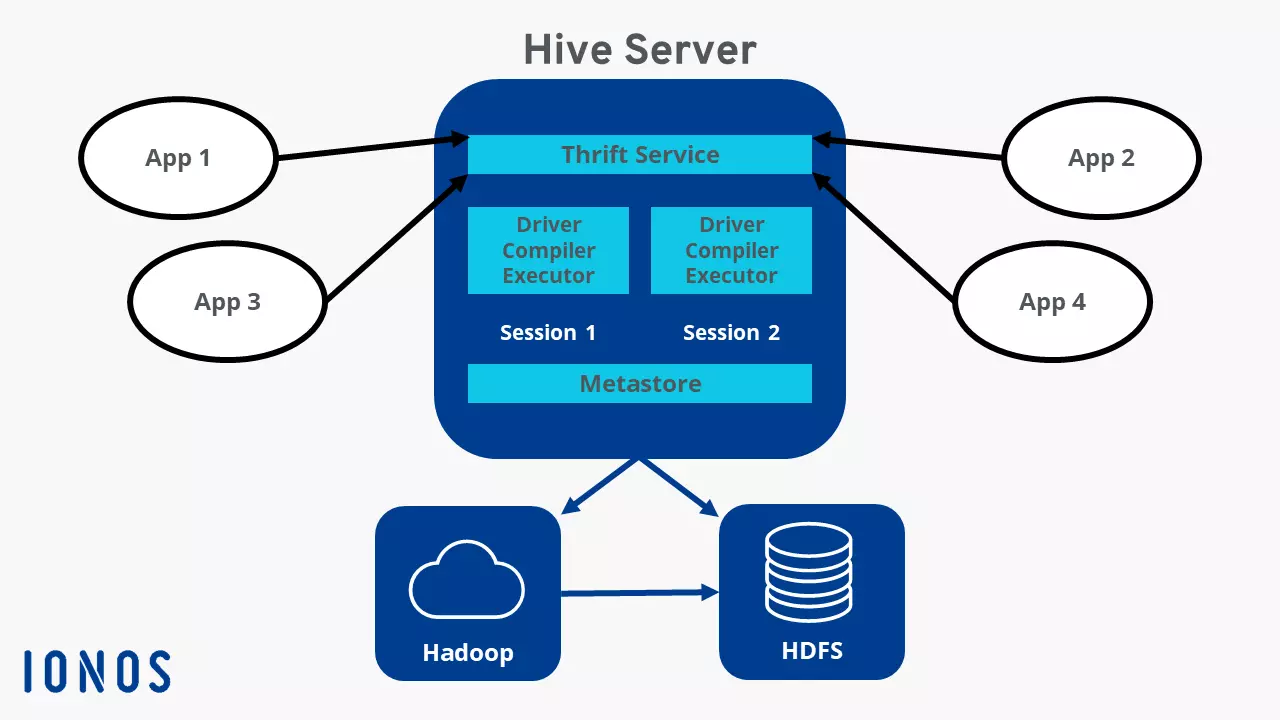

An overview of the Hive architecture

The most important components of the Hive architecture include:

- Metastore: The central Hive storage location for metadata such as table definitions, schemas and directory locations as well as information on partitions, kept in an RDBMS

- Driver: Accepts HiveQL commands and processes them with the Compiler (collecting information), Optimizer (determining the optimal processing method) and Executor (executing the task)

- Command Line + User Interface: Interface for external users

- Thrift Server: Allows external clients to communicate with Hive over the network and supports integration through protocols similar to JDBC and ODBC

How did Apache Hive come about?

Apache Hive aims to make it easier for users with basic SQL knowledge, rather than Java expertise, to work with petabytes of data. It was developed in 2007 by its founders Joydeep Sen Sarma and Ashish Thusoo while they were building Facebook’s Hadoop framework. With hundreds of petabytes of data, that framework is one of the biggest in the world. In 2008 Facebook gave the open source community access to Hive, and in February 2015 version 1.0 was published.